
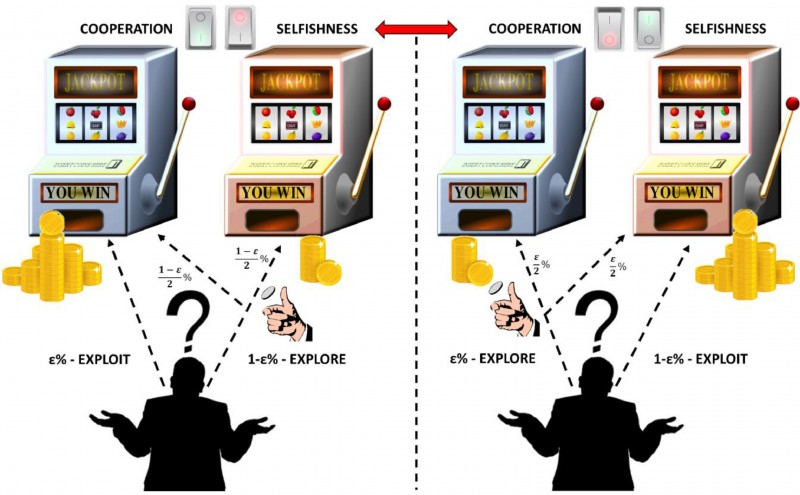

The multi-armed bandit model as a slot machine game

2020-11-10

A multi-armed bandit algorithm speeds up the evolution of cooperation

Most evolutionary biologists consider selfishness an intrinsic feature of our genes and the best choice in social situations. Yet current research on the evolution of cooperation is based on rules that assume that payoffs are known to all parties involved and do not change over time. KLI fellow Roberto Cazzolla Gatti challenges these fundamental assumptions to create a new theoretical framework for the evolution of cooperation.

In recent years, extensive research has examined the mechanisms that allow cooperation to emerge “in a world of defectors” and become an evolutionarily stable strategy.

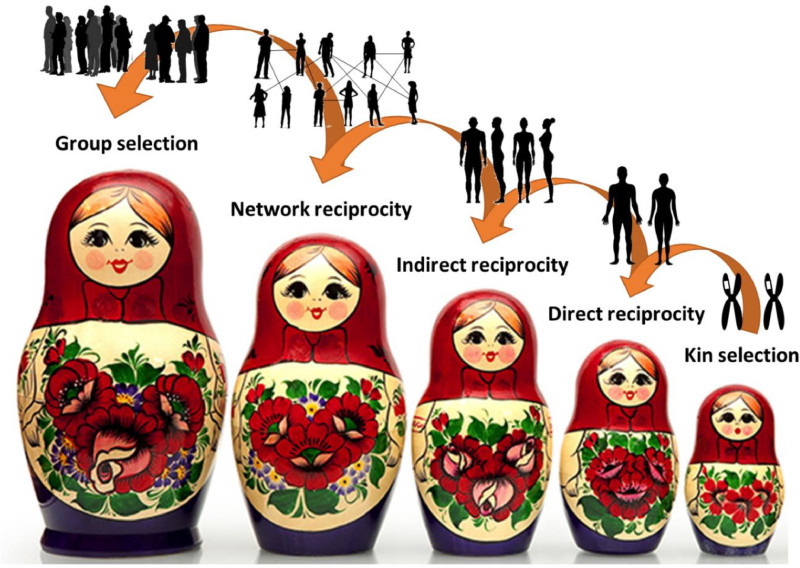

Here's the problem. Current research on the evolution of cooperation is based on five main rules (kin selection, direct reciprocity, indirect reciprocity, network reciprocity, and group selection), all of which assume that payoffs are known to all parties involved and do not change over time. Yet these assumptions deeply constrain the possibilities for genuine cooperation. By following any of the five rules blindly, individuals risk getting stuck in unfavorable situations instead of enjoying the mutual benefits of cooperation.

Challenging the basic assumptions underlying those five rules, Roberto Cazzolla Gatti developed a multi-armed bandit (MAB) model that individuals may employ to make the best choice most of the time, thus accelerating the evolution of altruistic behavior.

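The MAB model is a classic problem in decision sciences: an agent repeatedly chooses among several options ("arms") whose payoffs are initially unknown, and must balance exploring new options against exploiting the best one found so far. A common strategy for this problem that applies extremely well to the evolution of cooperation is the epsilon-greedy (ε-greedy) algorithm. Through this decision-making algorithm, cooperation evolves as a process nested in the hierarchical levels that exist among the five rules.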
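To make the ε-greedy idea concrete, here is a minimal Python sketch of the generic textbook algorithm, not the model from the paper. The function name `epsilon_greedy`, the two arms ("defect" and "cooperate"), and their payoff probabilities are hypothetical values chosen only for illustration. With probability ε the agent tries a random arm; otherwise it picks the arm with the highest payoff estimate so far.

```python
import random


def epsilon_greedy(payoff_probs, epsilon=0.1, rounds=5000, seed=0):
    """Run a generic epsilon-greedy agent on arms with hidden 0/1 payoffs.

    payoff_probs: chance that each arm pays 1 (unknown to the agent;
                  the values used below are illustrative assumptions).
    Returns (estimates, counts, total_reward).
    """
    rng = random.Random(seed)
    n_arms = len(payoff_probs)
    counts = [0] * n_arms        # how often each arm was chosen
    estimates = [0.0] * n_arms   # running mean payoff observed per arm
    total_reward = 0

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: try any arm at random.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: choose the arm that currently looks best
            # (ties go to the lowest-indexed arm).
            arm = max(range(n_arms), key=lambda a: estimates[a])

        reward = 1 if rng.random() < payoff_probs[arm] else 0
        counts[arm] += 1
        # Incremental update of the running mean for the chosen arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward

    return estimates, counts, total_reward


if __name__ == "__main__":
    arms = ["defect", "cooperate"]   # hypothetical labels
    hidden_payoffs = [0.3, 0.7]      # hypothetical payoff probabilities
    estimates, counts, total = epsilon_greedy(hidden_payoffs, epsilon=0.1)
    for name, est, n in zip(arms, estimates, counts):
        print(f"{name}: estimated payoff {est:.2f}, chosen {n} times")
    print("total reward:", total)
```

Setting `epsilon=0` in this sketch removes exploration entirely: the agent never leaves the first arm, which mirrors the risk of getting stuck in an unfavorable situation when a fixed rule is followed blindly.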

The interacting layers of the evolution of cooperation are like a matryoshka: nested one within another.

The gist: to study the evolution of cooperation, biologists can follow a Matryoshka ε-greedy model in which kin selection is the smallest doll (lowest level) and group selection the biggest one (highest level). We first examine kin selection as a possible explanation for the evolution of cooperation. If it does not seem to be the likely reason for an altruistic act, we move up to a higher level and, through the epsilon-greedy algorithm, include other ecological, evolutionary, and developmental (Eco-Evo-Devo) variables relevant to explaining the specific interaction.

This reinforcement learning model provides a powerful tool for better understanding, and even probabilistically quantifying, the chances that cooperation will evolve.

Read the full paper:

Gatti, Roberto Cazzolla. "A multi-armed bandit algorithm speeds up the evolution of cooperation." Ecological Modelling 439: 109348. https://doi.org/10.1016/j.ecolmodel.2020.109348